The Fist Host Robots.txt Generator is designed to help webmasters, SEOs, and marketers generate robots.txt files without much technical knowledge. Be careful, though: your robots.txt file has a significant impact on whether Google can access your website, whether it is built on WordPress or another CMS. Although the tool is straightforward to use, we suggest you familiarize yourself with Google’s instructions before using it, because an incorrect robots.txt file can leave search engines like Google unable to crawl critical pages on your site, or even your entire domain, which can seriously harm your SEO. Let’s look at some of the features our online Robots.txt Generator provides.

A robots.txt file must follow a specific format. If a line is malformed, search robots may ignore it or misread your rules. The basic format of a robots.txt file is:

User-agent: [user-agent name]

Disallow: [URL string not to be crawled]

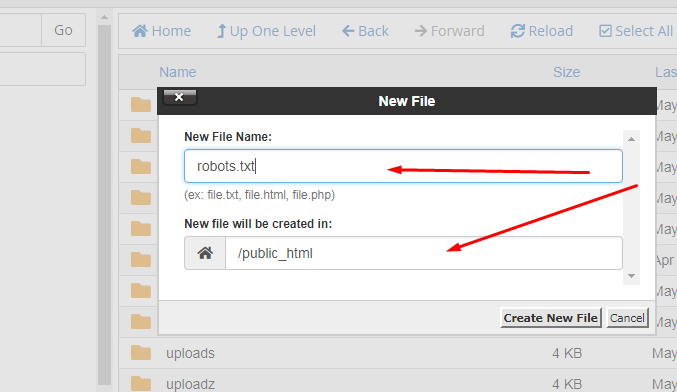

Keep in mind that the file must be saved as plain text, named robots.txt, and placed in the root of your site.
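The format above can be sketched in a few lines of Python. This is only an illustration, not how the generator itself works: the function names `build_robots_txt` and `write_robots_txt` and the `/private/` path are hypothetical.

```python
from pathlib import Path


def build_robots_txt(user_agent: str, disallow_paths: list[str]) -> str:
    """Build the text of a one-group robots.txt file (hypothetical helper)."""
    lines = [f"User-agent: {user_agent}"]
    # An empty "Disallow:" line means nothing is blocked for this user agent.
    for path in disallow_paths or [""]:
        lines.append(f"Disallow: {path}".rstrip())
    return "\n".join(lines) + "\n"


def write_robots_txt(content: str, directory: str = ".") -> Path:
    """Save the content as a plain-text file named robots.txt."""
    target = Path(directory) / "robots.txt"
    target.write_text(content, encoding="utf-8")
    return target


if __name__ == "__main__":
    # "/private/" is an example path, not one taken from a real site.
    print(build_robots_txt("*", ["/private/"]))
```

Calling `write_robots_txt(build_robots_txt("*", ["/private/"]))` would save the file in the current directory; it still has to be uploaded to the root of the site to take effect.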

A custom robots.txt generator for Blogger is a tool that helps webmasters keep confidential parts of their websites from being indexed by search engines. In other words, it generates the robots.txt file for you. It makes life easier for website owners, who no longer have to write the whole robots.txt file by hand. You can create the file by using the steps below:

With these steps you can easily create a robots.txt file for your website.

If you already have a robots.txt file, you need a properly optimized, error-free version to keep your files protected, so examine the file carefully. To optimize a robots.txt file for search engines, decide clearly what belongs under an Allow directive and what belongs under a Disallow directive. Folders such as the image folder and the content folder should go under Allow if you want search engines and other people to access that data. Duplicate web pages, duplicate content, duplicate folders, archive folders, and the like should go under Disallow.
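One way to examine a set of Allow and Disallow rules is to test a few URLs against them. The sketch below uses Python's standard `urllib.robotparser` module; the `/images/` and `/archive/` folders, the `example.com` URLs, and the `is_allowed` helper are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Example rules: the folder names are illustrative, not from a real site.
RULES = """\
User-agent: *
Allow: /images/
Disallow: /archive/
"""


def is_allowed(rules: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/images/logo.png",
        "https://example.com/archive/2019/",
        "https://example.com/about",
    ):
        print(url, is_allowed(RULES, url))
```

URLs that match no rule, such as `/about` here, are treated as allowed.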

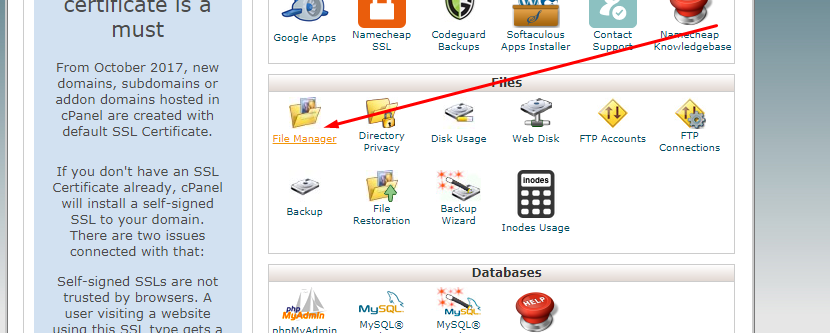

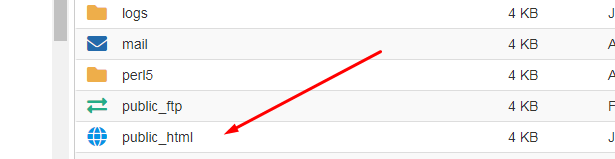

A robots.txt file is not required in WordPress, but creating one is recommended for better SEO. You can easily create a WordPress robots.txt file that stops search engines from accessing some of your data by using rules like the following:

User-agent: *

Disallow: /admin/

Disallow: /admin/*?*

Disallow: /admin/*?

Disallow: /blog/*?*

Disallow: /blog/*?

If you have a sitemap, add its URL as:

Sitemap: http://www.yoursite.com/sitemap.xml
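The `*` in the Disallow rules above is a wildcard that major crawlers such as Googlebot understand. As a rough illustration of how such patterns behave, here is a simplified matcher; `rule_matches` is a hypothetical helper that approximates the documented wildcard behavior and is not code used by any search engine.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Approximate robots.txt path matching (simplified sketch).

    The pattern is matched from the start of the path, '*' stands for
    any run of characters, and a trailing '$' anchors the end of the URL.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then turn each '*' into '.*'.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += "$"
    return re.match(regex, path) is not None


if __name__ == "__main__":
    # "/blog/*?" only matches blog URLs that carry a query string.
    print(rule_matches("/blog/*?", "/blog/post?replytocom=5"))
    print(rule_matches("/blog/*?", "/blog/post"))
    # "/admin/" on its own already matches everything under /admin/.
    print(rule_matches("/admin/", "/admin/page.php?id=1"))
```

Under this reading, the `/blog/*?` rules block only blog URLs with query strings, while the plain `/admin/` rule already covers every URL under that folder.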

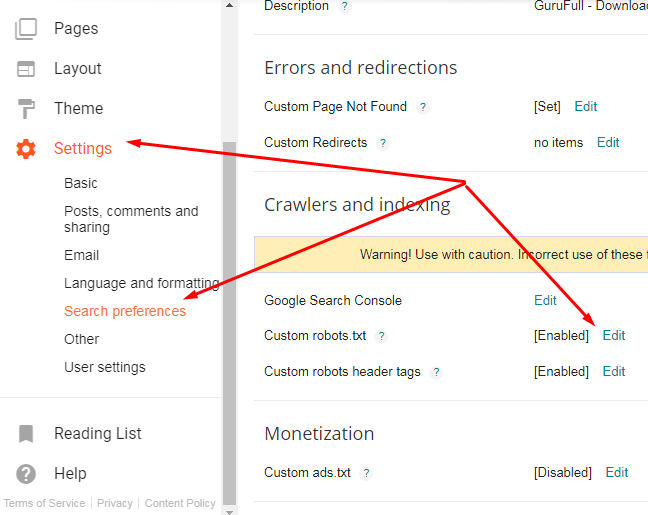

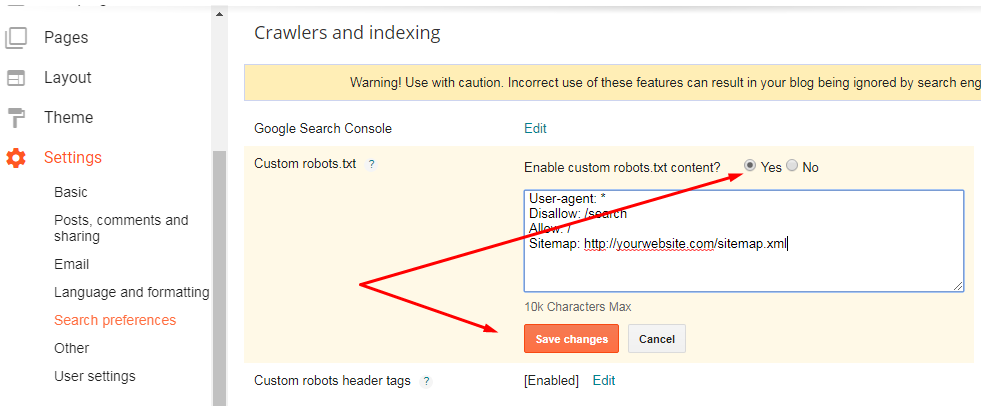

Blogger already has a robots.txt file built in, so you don’t have to worry much about it. However, its default rules are not always enough. You can alter the robots.txt file in Blogger to suit your needs by following the steps below:

4. From there, go to the “Custom robots.txt” tab, click Edit, and then “Yes”.

5. Paste your robots.txt file there to add more restrictions to the blog. You can also use a custom robots.txt generator for Blogger.

6. Save the settings and you are done.

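The following are some robots.txt templates.

Allow all robots full access:

User-agent: *

Disallow:

OR

User-agent: *

Allow: /

Block all robots from the entire site:

User-agent: *

Disallow: /

Block all robots from a specific folder:

User-agent: *

Disallow: /folder/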

As an added bonus, our robots.txt generator includes a block against many unwanted spiders, or spam bots, which generally crawl your website to collect the email addresses stored on its pages.
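A block of this kind is typically one group per unwanted bot, each with `Disallow: /`. The sketch below shows the general shape under that assumption; it is not the generator's actual list, and the bot names and the `block_bots` helper are placeholders invented for the example.

```python
def block_bots(bot_names: list[str]) -> str:
    """Return robots.txt groups that block each named bot from the whole site."""
    groups = [f"User-agent: {name}\nDisallow: /" for name in bot_names]
    # Groups are separated by a blank line.
    return "\n\n".join(groups) + "\n"


if __name__ == "__main__":
    # Placeholder names only; substitute the user agents you want to block.
    print(block_bots(["ExampleHarvester", "ExampleScraper"]))
```

Rules like these only affect crawlers that choose to honor robots.txt.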